It’s no longer news that people are slowly giving up on traditional search engines like Google, Bing, or DuckDuckGo. For most of us, “searching the internet” has always meant “Googling” something (duh, doesn’t internet search basically mean Google search?). But now things are shifting.

People are moving fast toward AI search tools like Perplexity, Google’s AI Overviews, and others. Before the ChatGPT wave, if you wanted an answer, you typed a query, clicked through ten or twenty results, opened countless tabs, and compared notes. Every website told a slightly different version of the story, and somewhere in that mix you formed your own opinion. It wasn’t efficient, but at least you saw both sides of the coin.

Now? Nobody has the patience. AI does all that boring work in seconds and spits out a neat answer. You can even ask follow-up questions like you’re talking to a friend. Of course it saves time.

But here’s the problem: saving time doesn’t mean the information is always trustworthy.

What AI search is actually doing

AI search engines don’t own the content they serve (just as Google doesn’t own content; it only ranks it). They’re trained on whatever is available online, plus whatever extra data their companies feed them, and many also pull live results from the web when you ask a question. And guess what type of content these models love the most? User-generated content.

Not the polished stuff on official blogs, but raw, unfiltered posts on forums like Reddit, Quora, and Stack Overflow. Real people talking, real experiences being shared. That’s why you’ll often see AI citing those sites: that’s where the “authentic” voices are.

At least, that’s the idea.

The messy reality

Companies aren’t dumb. They know AI scrapes content from forums. So what do they do? They send in their marketing teams to flood those same forums with “honest reviews” (read: fake ones written by interns). Suddenly, every product on Reddit has glowing feedback, every service on Quora is the “absolute best,” and every Stack Overflow thread conveniently pushes a certain tool.

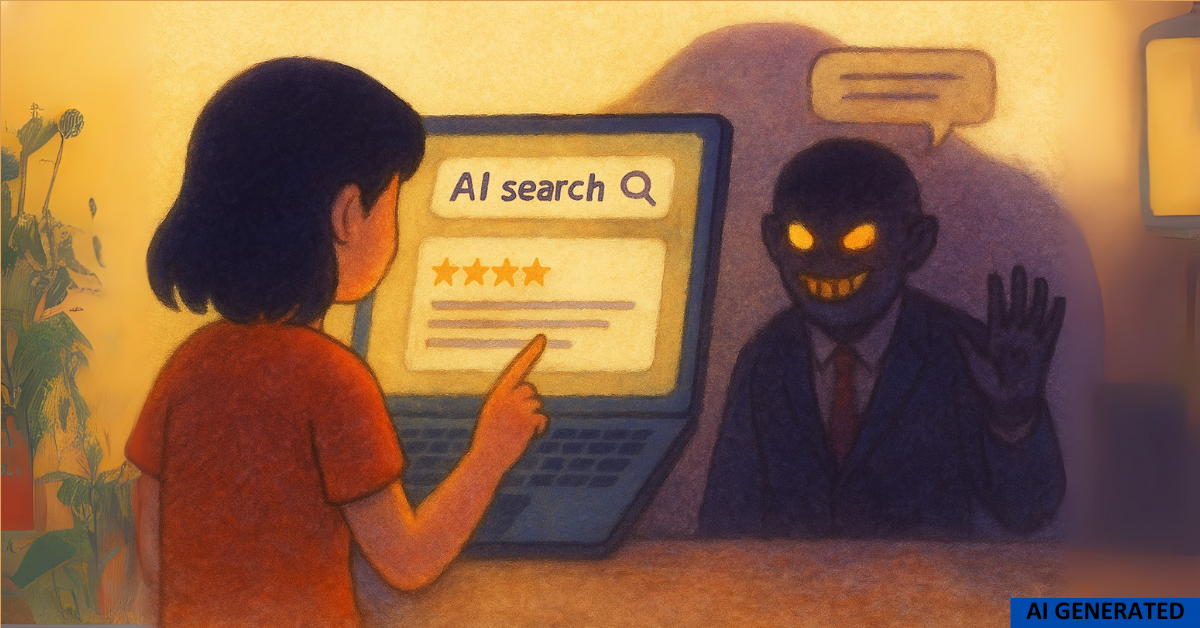

Now imagine what happens when you ask an AI search engine, “What’s the best phone under 30k?” Instead of hours of research, you get a confident summary that just happens to draw heavily on those manipulated Reddit comments. The AI won’t tell you, “By the way, this was written by a brand rep posing as a college student.” It just presents it as fact.

And the scary part? Today’s AI models can’t reliably tell a genuine review from a planted one.

Why it’s a big deal

Recent surveys suggest that a growing number of Gen Z users prefer AI tools and social platforms over Google for search. Many openly say they’d rather ask ChatGPT or Perplexity than “Google it.” Even product searches, health questions, and career advice are moving to AI. It feels faster, smarter, and easier, until you realize the source of truth may not be truth at all.

In the old days, when you Googled something, at least you got ten different websites. You could compare, cross-check, and spot the odd one out. Now, with AI, you usually get one clean answer, and people take it at face value. If that answer is built on manipulated user-generated content, then what you’re actually consuming isn’t knowledge; it’s marketing in disguise.

The bottom line

AI search engines are convenient, no doubt. They’re faster than scrolling through 20 sites, less messy than juggling tabs, and smarter in conversation. But they’re also vulnerable to manipulation because of where they pull their information from. And if companies keep flooding user-generated platforms with polished fake content, the line between real opinion and paid promotion will blur even further.

So yes, AI might save us time. But if we stop questioning what it shows us, we risk living in an internet where the “truth” isn’t truth at all, just whatever was marketed the hardest.

P.S. Chatbots can save time with quick answers, but when it comes to reviews of products and services, remember that they often echo biased or manipulated content. Quick answers? Yes. Honest reviews? Not always.